How to Use Ollama | Create Private AI Apps | Part 1: Installation & Setup

- Brock Daily

- Jan 7

As AI models grow in power and capability, so does the concern for privacy and security. Many AI applications today require cloud-based APIs, which introduce data privacy risks. However, Ollama enables you to run large language models (LLMs) entirely on your local machine, giving you complete control over your AI apps.

In Part 1 (this guide), we’ll walk you through:

1.) Why Use Ollama for Private AI

2.) Installing Ollama on your system

3.) Running AI models privately

In Part 2, we'll focus on:

1.) Using Ollama’s API to integrate AI into your applications

2.) Customizing AI models for specialized use cases

3.) Basic GUI (graphical user interface) design and layout for chatbots

By the end, you’ll have a fully functional self-hosted AI system, capable of running advanced AI applications without internet dependency.

1. Why Use Ollama for Private AI?

Ollama has quickly become a popular solution for privacy-focused AI, enabling users to run large language models (LLMs) locally without relying on cloud-based services. Unlike cloud-hosted models such as OpenAI’s GPT-4 or Anthropic’s Claude, which are accessed through remote APIs, Ollama runs inference on your own device, so your prompts and responses stay on your machine.

Ollama Features:

• Privacy & Security – Inference runs locally, so prompts and responses are not sent to an external API, giving you complete control over your data.

• Optimized Performance – Runs efficiently on consumer hardware, using GPU acceleration when available and falling back to the CPU otherwise.

• Offline AI – Once a model has been downloaded, no internet connection is required, making it ideal for air-gapped environments.

• Custom AI Models – Customize models with a Modelfile (system prompt, parameters) or import your own model weights, including fine-tuned adapters.

• Scalable – Works across laptops, edge devices, and enterprise servers.

Ollama Backstory:

• Founded in San Francisco in 2023 by Michael Chiang and Jeffrey Morgan

• Rapid adoption across developer and AI communities

• Expanded support for models like Llama 3, Mistral, and Code Llama

• Available as an official Docker image (ollama/ollama) for containerized and enterprise deployment

• Contributing to an open-source ecosystem for AI innovation

Ollama is redefining AI deployment by prioritizing privacy, performance, and flexibility. Whether for independent developers, security-conscious organizations, or enterprise applications, it provides a powerful framework for running AI entirely on local infrastructure.

2. Installing Ollama

Install on macOS & Linux

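On macOS, download and open the Ollama app from the official website, ollama.com; the install script below targets Linux only. On Linux, open a terminal and run Ollama’s install script, which sets up the required components: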

curl -fsSL https://ollama.com/install.sh | sh

Verify Installation

After installation, confirm Ollama is installed by running:

ollama --version

This should return the installed version number, indicating a successful setup.
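If you want the same check inside a setup script, the sketch below wraps it in a small function. It assumes that ollama --version prints a line containing "version is" followed by the number (for example, "ollama version is 0.5.7"); check_ollama is a hypothetical helper name, not part of Ollama.

```shell
#!/bin/sh
# check_ollama: hypothetical helper, not part of Ollama.
# Prints the installed version number, or returns 1 if ollama is missing
# or its output does not match the assumed "... version is X.Y.Z" format.
check_ollama() {
  if ! command -v ollama >/dev/null 2>&1; then
    echo "ollama not found on PATH; re-run the installer or open a new terminal" >&2
    return 1
  fi
  # Keep only the first "version is X.Y.Z" match from stdout and stderr.
  version="$(ollama --version 2>&1 | sed -n 's/.*version is \([0-9][0-9.]*\).*/\1/p' | head -n 1)"
  if [ -z "$version" ]; then
    echo "could not parse the output of 'ollama --version'" >&2
    return 1
  fi
  echo "$version"
}

# Example: stop a setup script early if the CLI is not usable.
#   check_ollama || exit 1
```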

Running Ollama on Windows

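Ollama now ships a native Windows installer, so WSL2 is no longer required. If you prefer a Linux environment, you can instead run Ollama inside WSL2 (Windows Subsystem for Linux): install WSL2 (for example, by running wsl --install in an administrator PowerShell), then follow the Linux installation instructions from within your Linux distribution.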
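If you take the WSL2 route, it helps to confirm that your shell is actually running inside WSL2 before you run the Linux installer. The sketch below relies on a common heuristic rather than an official Ollama or Microsoft API: WSL2 kernel release strings contain "microsoft-standard", while WSL1 reports "Microsoft". wsl_flavor is a hypothetical helper name.

```shell
#!/bin/sh
# wsl_flavor: hypothetical helper. Classifies a kernel release string
# (default: the running kernel, via uname -r) as wsl2, wsl1, or not-wsl.
# Heuristic assumption: WSL2 kernels contain "microsoft-standard" and
# WSL1 kernels contain "Microsoft"; the match is case-sensitive.
wsl_flavor() {
  release="${1:-$(uname -r)}"
  case "$release" in
    *microsoft-standard*) echo "wsl2" ;;
    *Microsoft*)          echo "wsl1" ;;
    *)                    echo "not-wsl" ;;
  esac
}

# Example: warn before following the Linux install steps outside WSL2.
#   [ "$(wsl_flavor)" = "wsl2" ] || echo "This shell does not appear to be running under WSL2."
```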

You may also download Ollama with one click from the official website, ollama.com.

3. Running AI Models Locally

Pull an AI Model

Ollama allows you to fetch and run open-source models directly on your machine.

For example, to download Llama 3.2, use:

ollama pull llama3.2

You can browse other models in the library at ollama.com/library. To list the models already downloaded to your machine, run:

ollama list

Start an AI Session

Once downloaded, start interacting with the model:

ollama run llama3.2

If the model has not been pulled yet, ollama run downloads it first. You can now chat with the model offline, keeping complete control over your data while inference runs on your own hardware. Type /bye to end the session.
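When you want to script these steps rather than type them, the sketch below wraps the ollama list, ollama pull, and ollama run commands from this section. has_model, ensure_model, and ask_model are hypothetical helper names; has_model assumes that ollama list prints a header row followed by one model per line with the name in the first column, and that an untagged name such as llama3.2 refers to its latest tag. Passing a prompt as an argument to ollama run prints a single reply and exits instead of opening an interactive chat.

```shell
#!/bin/sh
# Hypothetical helpers that wrap `ollama list`, `ollama pull`, and `ollama run`.

# has_model MODEL: succeed if MODEL appears in `ollama list`.
# Assumes line 1 of the output is a header and column 1 holds the model name.
has_model() {
  case "$1" in
    *:*) want="$1" ;;
    *)   want="$1:latest" ;;   # an untagged name refers to the "latest" tag
  esac
  ollama list | awk 'NR > 1 { print $1 }' | grep -qxF "$want"
}

# ensure_model MODEL: download MODEL only if it is not installed yet.
ensure_model() {
  if has_model "$1"; then
    echo "already installed: $1"
  else
    ollama pull "$1"
  fi
}

# ask_model MODEL PROMPT...: send one prompt, print the reply, and exit
# instead of opening an interactive session.
ask_model() {
  model="$1"
  shift
  ollama run "$model" "$*"
}

# Example:
#   ensure_model llama3.2
#   ask_model llama3.2 "Explain in one sentence why local inference helps privacy."
```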

But setting up Ollama is just the beginning.

What’s Next?

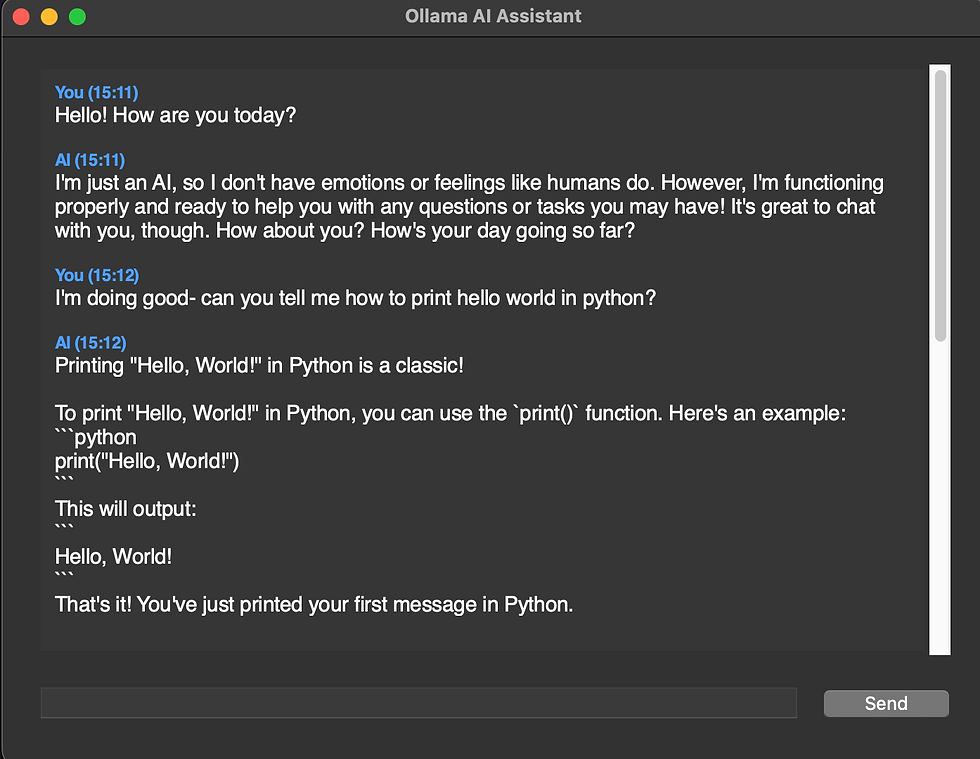

In Part 2, we’ll take things further by exploring how to integrate Ollama into real-world applications like this GUI:

What to Expect in Part 2:

1. Using Ollama’s API to build local AI-powered tools

2. Customizing models to create AI systems tailored to your needs

3. Designing a basic GUI for chatbot interfaces

With these next steps, you’ll be able to move beyond basic AI interactions and start building fully functional AI applications—all running securely on your own infrastructure.

🔗 Continue to Part II: Advanced Ollama API, Model Customization & GUI Development
